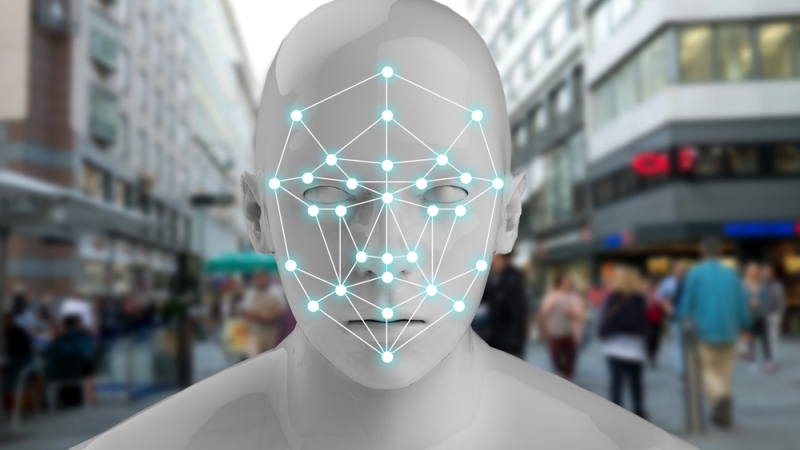

Brad Smith, Microsoft’s president and chief legal officer, today urged the U.S. and other governments to take legislative action to shape legal frameworks for the use of facial recognition technologies.

In a policy paper and a related speech at the Brookings Institution, Smith said, “we believe there are three problems that governments need to address” through legislation.

The first is the tendency of the technology, “especially in its current state of development,” to “increase the risk of decisions and, more generally, outcomes that are biased and, in some cases, in violation of laws prohibiting discrimination.”

In addition to bias impacts, he said governments should consider legislation to address the technology’s ability to “lead to new intrusions into people’s privacy,” and to “encroach on democratic freedoms” if the technology is used by governments to conduct mass surveillance.

“We believe it’s important for governments in 2019 to start adopting laws to regulate this technology,” Smith said, in order to get out ahead of where the technology will be years from now.

“The facial recognition genie, so to speak, is just emerging from the bottle,” he said. “Unless we act, we risk waking up five years from now to find that facial recognition services have spread in ways that exacerbate societal issues. By that time, these challenges will be much more difficult to bottle back up.”

“In particular, we don’t believe that the world will be best served by a commercial race to the bottom, with tech companies forced to choose between social responsibility and market success,” he said. “We believe that the only way to protect against this race to the bottom is to build a floor of responsibility that supports healthy market competition. And a solid floor requires that we ensure that this technology, and the organizations that develop and use it, are governed by the rule of law.”

“While we don’t have answers for every potential question, we believe there are sufficient answers for good, initial legislation in this area that will enable the technology to continue to advance while protecting the public interest,” Smith said. “It’s critical that governments keep pace with this technology, and this incremental approach will enable faster and better learning across the public sector.”

Smith also called on the tech sector to act on its own to create “safeguards” to address facial recognition technology, and said that early next year the company would begin implementing six principles to guide Microsoft’s development of the technology. Those six principles are:

“Fairness. We will work to develop and deploy facial recognition technology in a manner that strives to treat all people fairly.

Transparency. We will document and clearly communicate the capabilities and limitations of facial recognition technology.

Accountability. We will encourage and help our customers to deploy facial recognition technology in a manner that ensures an appropriate level of human control for uses that may affect people in consequential ways.

Nondiscrimination. We will prohibit in our terms of service the use of facial recognition technology to engage in unlawful discrimination.

Notice and consent. We will encourage private sector customers to provide notice and secure consent for the deployment of facial recognition technologies.

Lawful surveillance. We will advocate for safeguards for people’s democratic freedoms in law enforcement surveillance scenarios, and will not deploy facial recognition technology in scenarios that we believe will put these freedoms at risk.”

Smith said the company will publish more details about the principles next week, and “will work in the coming months to gather feedback and suggestions from interested individuals and groups on how we can best implement them.”