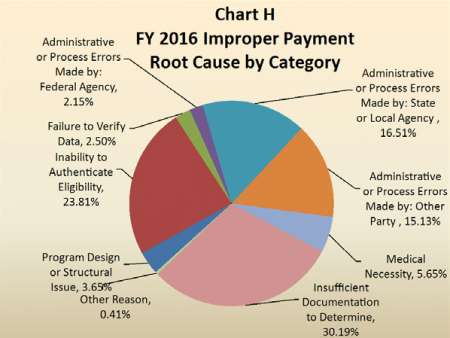

Federal agencies are hemorrhaging billions of dollars every year to fraud, waste, and improper payments, according to the Government Accountability Office (GAO). And the problem may be getting worse: governmentwide improper payments reached an estimated $144 billion in fiscal year 2016.

To put that figure in perspective, GAO found that $44 billion of Federal improper payments in FY 2016 were caused by insufficient documentation. Another $34 billion resulted from agencies’ inability to authenticate eligibility, meaning a payment was made improperly because the agency lacked databases or other resources to determine the recipient’s eligibility status.

MeriTalk spoke with a wide range of private sector experts about how the government can begin to reverse this trend. All urged the government to embrace big data analytics as the only way to prevent improper payments before they occur.

“We need analytical techniques that are sophisticated, but easy enough to use so analysts performing investigations can access and combine these different data types,” said Alan Ford, director of Teradata Government Systems. “The more data that is made available for analysis, the better the chances that agencies can generate adequate levels of information to drive the detection of fraud, waste, and abuse.”

“Big data is an interesting area because it means there’s an overwhelming amount of stuff out there. It’s like a screen full of static and we’ve got to figure out what the image behind it is,” said Jake Freivald, vice president of marketing at Information Builders. “There’s a lot of benefit in having machines look at information that’s out there.”

“Catching an instance of fraud, waste, and abuse is beneficial, but a data-focused prevention strategy allows systems to move away from simply flagging singular abnormalities to linking information together in ways that uncover fraudulent networks,” said Bill Fox, vice president of Healthcare and Life Sciences at MarkLogic. “More relevant data means better analytics, better analytics means less false positives and negatives, which translates into the ability to spot suspicious activity closer to real time. While most fraud analytics tools can guide investigatory resources in the right direction, data remains the key to developing a holistic view that can evolve as new data sources become available–and as fraudsters adapt their techniques.”
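Fox’s distinction between flagging single anomalies and linking records into networks can be illustrated with a simple form of link analysis. The Python sketch below is a hypothetical example, not MarkLogic’s method. It assumes each claim record carries identifying fields such as an address, bank account, or phone number, treats any shared value as a link, and uses a union-find structure to group linked records into candidate networks. The field names and the `min_size` cutoff are illustrative assumptions.

```python
from collections import defaultdict


def find_networks(records, keys=("address", "bank_account", "phone"), min_size=3):
    """Group records that share any identifying value (hypothetical field names)
    and return the groups with at least `min_size` members, as sorted ID lists."""
    parent = list(range(len(records)))

    def find(i):
        # Follow parent pointers to the group root, compressing the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(i, j):
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[rj] = ri

    # The first record seen with a given (field, value) pair becomes the anchor;
    # every later record with the same pair is linked to it.
    first_seen = {}
    for idx, rec in enumerate(records):
        for key in keys:
            value = rec.get(key)
            if value is None:
                continue
            token = (key, value)
            if token in first_seen:
                union(first_seen[token], idx)
            else:
                first_seen[token] = idx

    groups = defaultdict(list)
    for idx, rec in enumerate(records):
        groups[find(idx)].append(rec["id"])
    return [sorted(ids) for ids in groups.values() if len(ids) >= min_size]


# Made-up example records: A and B share a bank account, B and C share an address.
records = [
    {"id": "A", "address": "1 Main St", "bank_account": "111"},
    {"id": "B", "address": "9 Elm St", "bank_account": "111"},
    {"id": "C", "address": "9 Elm St", "phone": "555-0100"},
    {"id": "D", "address": "7 Oak Ave", "bank_account": "222"},
]
print(find_networks(records))  # [['A', 'B', 'C']]
```

A and C have nothing in common directly, but both connect through B, so the three land in one candidate network. In practice, analysts would weight links by how suspicious they are and review each group rather than treat every cluster as fraud.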

While some large agencies have established data analytics programs, their efforts have had only a minor impact on overall Federal improper payments. For example, Treasury has established the Do Not Pay System of Records, and has begun analyzing data across agencies to identify potential duplicative benefit payments in programs with related missions and beneficiaries. The Centers for Medicare and Medicaid Services, Department of Defense, Department of Labor, and the Social Security Administration have also started analytics efforts to help reduce improper payments.
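Treasury’s actual matching rules are not described here. As a rough illustration of what cross-program analysis for duplicative payments involves, the hypothetical Python sketch below groups payments by beneficiary and period and flags anyone paid by more than one program within the same group of related-mission programs. The program names, the `related_groups` mapping, and the monthly period key are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Payment:
    program: str         # hypothetical program identifier
    beneficiary_id: str  # assumes a shared identifier across programs
    period: str          # e.g. "2016-03" (assumed monthly granularity)


def find_duplicative(payments, related_groups):
    """Return {(beneficiary, period, group): sorted programs} for beneficiaries paid
    by more than one program in the same related-mission group for the same period."""
    program_to_group = {p: g for g, progs in related_groups.items() for p in progs}
    seen = defaultdict(set)
    for pay in payments:
        group = program_to_group.get(pay.program)
        if group is None:
            continue  # program is not part of any related-mission group
        seen[(pay.beneficiary_id, pay.period, group)].add(pay.program)
    return {key: sorted(progs) for key, progs in seen.items() if len(progs) > 1}


payments = [
    Payment("prog_a", "X", "2016-03"),
    Payment("prog_b", "X", "2016-03"),
    Payment("prog_a", "Y", "2016-03"),
    Payment("prog_c", "X", "2016-03"),
]
related = {"income_support": ["prog_a", "prog_b"]}
print(find_duplicative(payments, related))
# {('X', '2016-03', 'income_support'): ['prog_a', 'prog_b']}
```

A flag here only means a payment deserves a closer look; some beneficiaries may legitimately qualify for more than one related program.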

But by the end of fiscal year 2015, these efforts had cumulatively stopped only $5.9 billion in improper payments.

“The government’s No. 1 priority is to get benefits to the citizen, and it should be. This focus, however, requires benefits agencies to distribute money as fast as possible with only a cursory review of the recipient in advance of payment,” said Jon Lemon, a solutions specialist at SAS Government. “Estimates show that for the most part government gets this right 98 to 99 percent of the time. An error rate of only 1 to 2 percent promotes this ‘pay and chase’ approach, but when dealing with the sheer volume of money distributed through these benefits programs–resulting in billions of dollars of improper payments–this needs to change. Integrating analytics into the pre-approval process will ensure that individuals are vetted before the payment is distributed.”
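The difference between “pay and chase” and pre-approval vetting comes down to when the checks run. This hypothetical Python sketch runs a few illustrative checks before a payment is released and holds any request that fails one for review. The exclusion list, the verified-eligibility set, and the per-payment limit are assumptions chosen for the example, not any agency’s actual rules.

```python
from dataclasses import dataclass


@dataclass
class PaymentRequest:
    recipient_id: str
    amount: float


def vet_before_payment(req, excluded, verified_eligible, max_amount):
    """Run illustrative pre-payment checks; return (approved, reasons for holding)."""
    reasons = []
    if req.recipient_id in excluded:
        reasons.append("recipient on exclusion list")
    if req.recipient_id not in verified_eligible:
        reasons.append("eligibility not verified")
    if req.amount > max_amount:
        reasons.append("amount exceeds program limit")
    # Approve only when no check produced a reason to hold the payment.
    return (not reasons, reasons)


excluded = {"R9"}            # hypothetical exclusion list
eligible = {"R1", "R9"}      # hypothetical verified-eligibility set
print(vet_before_payment(PaymentRequest("R1", 500.0), excluded, eligible, 1000.0))
# (True, [])
```

Because the checks run before money goes out, a failed check costs a review instead of a recovery effort.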

In its 2017 Fraud Survey, ACL found that Federal agencies underperform on fraud detection and reporting practices. The survey, which polled more than 500 private sector and government professionals, indicated that fraud in the Federal space costs taxpayers $136 billion each year. ACL also found that less than half of the fraud occurring in Federal agencies is reported.

GAO said that compliance with the Digital Accountability and Transparency Act of 2014 (DATA Act), which directs Federal agencies to establish data governance structures, would improve the accountability of Federal spending.

David Rubal, chief technologist at DLT, said that sweeping mandates, such as the DATA Act, are not as effective for individual agencies because every agency has different needs. He said agencies will adopt data analytics programs at their own pace based on their own needs.

“A mandate looks good on paper, but I don’t know if it’ll get cultural acceptance. Agencies will embrace data analytics at different levels based on the types of data they need,” Rubal said. “Agencies have been slow to adopt it because they’re used to doing things the old way. Getting insight into the right data and being able to inspect it is key. Instituting data analytics, they’ll be able to see immediate benefits.”

While GAO credits Treasury and the Office of Management and Budget (OMB) with taking significant steps to set up data management practices, its report states that many agencies, such as the U.S. Postal Service (USPS), have work to do.

USPS remains in a “serious financial crisis” this year: it has reached its $15 billion borrowing limit and finished FY 2016 with a net loss of $5.6 billion, GAO reports. Postmaster General Megan Brennan, who testified at a House Oversight and Government Reform Committee hearing on Feb. 7, said the self-funded USPS needs legislative and regulatory reform.

“Less volume, limited pricing flexibility, and increasing costs mean that there is less revenue to pay for our growing delivery network and to fund other legally mandated costs,” Brennan said. “Our financial challenges are serious, but solvable.”

Freivald recommended mapping as an effective way to analyze big data. He said mapping where purchases take place is especially useful for spotting fraudulent transactions. For example, a small store that records more purchases than the large store right next to it could signal suspicious activity, Freivald said.
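Freivald’s store example can be expressed as a simple geographic comparison. The hypothetical Python sketch below assumes each store has coordinates, a floor area, and a dollar volume of purchases for the same period. It flags any store that sits within a given radius of a larger store yet records more purchases than that store. The 0.5 km radius, the field names, and the example stores are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Store:
    name: str
    lat: float         # degrees
    lon: float         # degrees
    floor_area: float  # square feet
    purchases: float   # dollar volume for the period


def distance_km(a, b):
    """Great-circle distance between two stores using the haversine formula."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dp = p2 - p1
    dl = math.radians(b.lon - a.lon)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(h))


def flag_outsized_small_stores(stores, radius_km=0.5):
    """Return (small_store, larger_neighbor) pairs where the smaller store
    out-sells a larger store within radius_km."""
    flagged = []
    for s in stores:
        for n in stores:
            if n is s:
                continue
            if (distance_km(s, n) <= radius_km
                    and n.floor_area > s.floor_area
                    and s.purchases > n.purchases):
                flagged.append((s.name, n.name))
    return flagged


stores = [
    Store("corner_market", 38.9000, -77.0300, 1_500, 90_000),
    Store("supermarket", 38.9010, -77.0300, 40_000, 60_000),
    Store("distant_store", 39.5000, -77.0300, 50_000, 10_000),
]
print(flag_outsized_small_stores(stores))  # [('corner_market', 'supermarket')]
```

The pairwise loop is fine for a handful of stores; at national scale, a spatial index would be needed to find neighbors efficiently.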

He also said visualization is sometimes more useful than a written report.

“It’s taking something important to spot in a report and visualizing what’s going on,” Freivald said. “We’re talking about big data, but we’re really trying to talk about small data.”

SAS’s Lemon said some of the key considerations for proper analytics capabilities include anomaly detection, predictive modeling, link analysis, and text analysis. “These are all techniques that can be used to generate more accurate, better-prioritized data query results; reveal hidden relationships and suspicious associations; and distinguish between legitimate and suspicious activity,” Lemon said. “Good data management is needed to clean, organize, and harmonize the data so it can be used to find fraud, waste, and abuse. Additionally, automation can be deployed to reduce manual errors and put into perspective connections that an individual simply could not process.”
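Of the techniques Lemon lists, anomaly detection is the easiest to show in a few lines. One common approach, used here for illustration rather than attributed to SAS, is the modified z-score, which measures how far a value sits from the median in units of the median absolute deviation (MAD); the 3.5 cutoff is a conventional rule of thumb. The claim amounts in the example are made up.

```python
import statistics


def robust_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score,
    0.6745 * (value - median) / MAD, exceeds the threshold in absolute value."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # When more than half the values equal the median, MAD is zero and the
        # score is undefined; this sketch simply reports no outliers.
        return []
    return [i for i, v in enumerate(values) if abs(0.6745 * (v - med) / mad) > threshold]


claims = [120, 130, 125, 118, 122, 127, 980]  # hypothetical claim amounts
print(robust_outliers(claims))  # [6]
```

Using the median and MAD instead of the mean and standard deviation keeps a single extreme claim from inflating the baseline it is being measured against.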

Rubal said one trend he has seen evolve at both the state and Federal levels is the movement of data into the hands of the citizens who use it. Non-government workers, such as software designers and tech experts, can turn Federal data into engaging, navigable portals.

“Many agencies have established open data portals. That way, users can be more agile,” Rubal said. “This is the beginning of an era.”

Editor’s Note: This story has been updated to correct the name of ACL.