Technical Evaluation Process

The Technical Evaluation comprised three distinct elements. The first was the Critical Factor: whether the offeror proposed to perform all of the work involved in this combined Synopsis and Solicitation. If the offeror did propose to perform all of the work, the proposal was reviewed against the remaining criteria. If, however, the offeror did not propose to perform all of the work, the proposal was not reviewed against the additional criteria. The additional criteria were:

- 60% – Completeness of Plan and Approach

- 40% – Likelihood of Success

For Completeness of Plan and Approach, the offeror was to describe how it intended to meet the requirements identified in the relevant section of the SOW.

The combined Synopsis and Solicitation broke Likelihood of Success into two parts. The first was the past performance of the offeror as an organization on projects similar in both scope AND magnitude. The second was the experience of key personnel in working on projects similar in both scope AND magnitude. Section 3.2 of the Synopsis and Solicitation clearly articulated how offerors would be scored on these points.

The Evaluation Process

The Technical Evaluation Panel (TEP) members completed their Organizational Conflict of Interest forms and sent them to the TEP Chair. One panelist, the Chair, had several connections with people listed as key personnel in proposals, and he disclosed them to the rest of the panel and the Contracting Officer. Those connections included a couple of former classmates from the offeror ABC, a couple of former colleagues from ESYS, previous experience at a different agency that was listed in the work cited by ASI, and contractors from PAD and SAP who had supported him on previous projects. Those connections in no way clouded his judgment or affected the deliberations of the Panel, and the Contracting Officer agreed.

The Technical Proposals were distributed to the TEP online. Each panelist reviewed each proposal independently and made comments using the comment features in Adobe Acrobat. Once these reviews were complete, the panel convened to discuss the process and make sure the panelists were applying the criteria consistently. Panelists were then given additional time to further consider the proposals.

A total of 20 proposals were received. Of those, five did not propose to perform all of the work and were eliminated from further consideration. The TEP met to try to identify the top six or seven proposals; instead, only four proposals emerged in the top tier. The panel once again took time to consider the proposals and rank them.

Of the top four proposals, the TEP was unanimous in its selection of the highest qualified technical proposal. The top four are ranked as follows:

- DD

- PRO

- ABC

- ESYS

Individual Scoring

Failed to Propose for All of the Work

There were five proposals that did not propose to perform all of the work. They included:

- The BAV

- Point

- NEP

- GOS

- KAS

The BAV Consulting Group failed to include the development of the Web-based Training (Section 5.1.5 of the SOW) as well as the delivery of training sessions (Section 5.1.6 of the SOW), the delivery of Tier 2 Help Desk (Section 5.1.7 of the SOW), and the delivery of Technical Assistance (Section 5.2 of the SOW). Proposing to perform all of the work identified in the SOW was a critical factor in the combined Synopsis and Solicitation.

Point Solutions failed to understand that the Requirements Sessions (Section 5.1.1 of the SOW) and QA Session (Section 5.1.2 of the SOW) require facilitation to be successful. The last sentence in each of these sections reads: “The contractor will facilitate these meetings.” The “Assumption” on page 13 of the proposal states that the offeror’s resources are not required to be onsite. The panel concluded that the offeror did not propose to facilitate these meetings and consequently did not propose to perform all of the work identified in the SOW. Proposing to perform all of the work identified in the SOW was a critical factor in the combined Synopsis and Solicitation.

NEP failed to include the follow-on phases for PROGRAM and the balance of the Agency. Their proposal also omitted Web-based Training Development (SOW Section 5.1.5), Training Sessions (SOW Section 5.1.6), Help Desk Support (SOW Section 5.1.7), and Technical Assistance (SOW Section 5.2). These were critical omissions from the offeror’s proposal.

GOS Inc. failed to include the development of the Web-based Training (Section 5.1.5 of the SOW), the delivery of training sessions (Section 5.1.6 of the SOW), the delivery of Tier 2 Help Desk (Section 5.1.7 of the SOW), and the delivery of Technical Assistance (Section 5.2 of the SOW). Proposing to perform all of the work identified in the SOW was a critical factor in the combined Synopsis and Solicitation.

KAS Systems failed to include PROGRAM deployment in their solution. They also recommended the development of Test Cases and Scenarios in Task 1 of their proposal, but did not commit to delivering them, despite their inclusion in the Deliverables Table in Section 6.0 of the SOW. Additionally, the offeror did not propose Build Iterations, despite their inclusion in Section 5.1.3 of the SOW. Proposing to perform all of the work identified in the SOW was a critical factor in the combined Synopsis and Solicitation.

Remaining Proposals

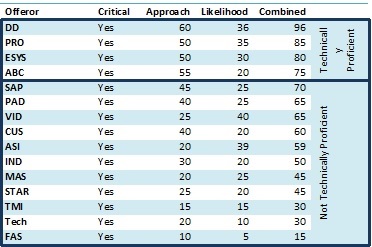

The offerors who proposed to perform all of the work were fully evaluated on both Completeness of Plan and Approach and Likelihood of Success. The results of this evaluation are shown in the evaluation results table.

The combined scores of the 15 remaining proposals ranged from a low of 15 to a high of 96. The panel agreed that an offeror had to have a combined score greater than 70 to be considered technically proficient. This separated the remaining proposals into two distinct groups: Technically Proficient and Not Technically Proficient.
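The gate-then-score logic described above can be sketched as follows. This is a hypothetical illustration, not the panel's actual tool: it assumes the 60%/40% weights translate directly into 60 and 40 available points, that the combined score is their simple sum on a 0 to 100 scale, and that "greater than 70" means strictly greater. The names `Proposal`, `combined_score`, and `classify` are invented for this sketch.

```python
from dataclasses import dataclass

# Assumed point maximums: each criterion's weight maps directly to points
APPROACH_MAX = 60        # Completeness of Plan and Approach (60%)
SUCCESS_MAX = 40         # Likelihood of Success (40%)
PROFICIENCY_CUTOFF = 70  # combined score must be strictly greater than this


@dataclass
class Proposal:
    offeror: str
    proposes_all_work: bool  # the Critical Factor
    approach: int = 0        # points out of APPROACH_MAX
    success: int = 0         # points out of SUCCESS_MAX


def combined_score(p: Proposal) -> int:
    """Sum the two criterion scores after checking each is in range."""
    if not 0 <= p.approach <= APPROACH_MAX:
        raise ValueError(f"{p.offeror}: approach score out of range")
    if not 0 <= p.success <= SUCCESS_MAX:
        raise ValueError(f"{p.offeror}: likelihood-of-success score out of range")
    return p.approach + p.success


def classify(p: Proposal) -> str:
    """Apply the Critical Factor gate first, then the proficiency cutoff."""
    if not p.proposes_all_work:
        # Not reviewed against the remaining criteria at all
        return "Eliminated"
    if combined_score(p) > PROFICIENCY_CUTOFF:
        return "Technically Proficient"
    return "Not Technically Proficient"


if __name__ == "__main__":
    # Hypothetical offerors and scores, for illustration only
    for p in [
        Proposal("Offeror A", proposes_all_work=False),
        Proposal("Offeror B", True, approach=25, success=20),
        Proposal("Offeror C", True, approach=40, success=30),
        Proposal("Offeror D", True, approach=55, success=35),
    ]:
        print(p.offeror, classify(p))
```

Under these assumptions, Offeror C lands exactly on 70 and is therefore not technically proficient, which is why the comparison uses a strict `>` rather than `>=`.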

Not Technically Proficient

Eleven offerors were deemed by the TEP not to be technically proficient. As mentioned previously, before the TEP met to consider proposals for the second time, it was anticipated that six or seven proposals would be in the Technically Proficient tier. However, when the TEP met to form a consensus understanding of the remaining proposals, only four of the 15 were found to be Technically Proficient. This differed from what was expected; however, the TEP affirmed that the top four were the only Technically Proficient proposals and that all others held higher degrees of risk and/or lacked completeness of approach, creating significant risk to the overall investment.

The offerors found not to be Technically Proficient include:

FAS’s proposal was simply a restatement of the SOW with no description of “How” the requirement would be met. The two resources identified as “Key” to this proposal lack experience performing work similar in nature and magnitude to this requirement, and the organizational work cited as past performance, with DEF, was found not to be consistent with the work involved in this requirement.

Tech’s proposal included the COTS product “NAME”; however, the offeror failed to identify how using this product would translate into value for the government (scope, quality, value, etc.). The proposal had several spelling and grammatical errors, which pointed to weaknesses in either the offeror’s attention to detail or its internal quality control process; both posed some risk to the project. The offeror appeared to have copied and pasted significant portions of the SOW without identifying “How” it proposes to meet the requirements. The two proposed Key Personnel both have a lot of interesting and varied experience, but neither is a close match to the scope of this requirement. Additionally, no organizational experience was cited, which was a significant weakness and raised the level of risk.

TMI did not fully understand the requirement, as evidenced by Section 1.1.1 of the proposal, in which they consider the configuration of switches and hubs to be consistent with the requirement. The offeror built their proposal around assumptions that were not consistent with the government’s requirement. None of the past performance cited by this offeror was found to be consistent with the scope or magnitude of this requirement. This severely limited both their approach and risk scores.

The STAR proposal lacked detail and specificity in terms of how they propose to meet the requirements. They provided a lot of information about what they are proposing but did not describe in detail how they would do it. For example, they indicate on page 3 of their proposal: “STAR will create Activity Diagrams, Use Case Models, and Use Case Narratives to identify the Normal Flow and as many reasonable Alternate Flows in an effort to gain consensus with stakeholders.” However, they do not provide an approach describing “How” they plan to accomplish this. This deficiency resulted in a score of 25 out of a possible 60 for their approach. This offeror failed to identify any “Key” personnel as called for in Section 3.2 of the combined Synopsis and Solicitation. As such, the TEP was not able to award any points for that aspect of the review, resulting in a score of 20 for that section.

The MAS proposal had several strengths, including a compressed schedule resulting from their Agile methodology. Additionally, they proposed an extra deliverable, the UML State-Machine diagram artifact, which was considered a value-adding element of the proposal. Among the weaknesses, the UAT Plan is scheduled for delivery very late in the process, immediately before User Acceptance Testing. That, however, is easily correctable. The bigger issue was in proposal Sections 1.2.1.2 and 1.2.1.3, the QA and Build sections. This is a SharePoint application development project, yet there was only a cursory discussion of the nuances or specifics of SharePoint. There was no discussion of the risks posed by the platform or how to overcome them. This lack of SharePoint application detail was reinforced by the panel’s perception of the personnel identified as “Key” to this acquisition. John Doe does not seem like a good match in terms of scope or magnitude. Deere has a lot of SharePoint architecture experience but not a lot of SharePoint development experience. In this requirement the architecture is already in place; the agency needs the application. As such, he is not as good a fit. Jane Doe does not have SharePoint experience. No one on the list of key personnel has any experience in document management, content management, or workflow development. These were significant deficiencies in the proposal.

The IND proposal had numerous spelling errors regarding the agency; on the cover letter page, they call the agency by the wrong name. This indicated a lack of attention to detail and/or the lack of an internal quality control process. This offeror significantly limits the in-person work. While that is to be expected, the SOW (Sections 5.1.1 and 5.1.2) was explicit in requiring facilitation of the requirements and QA meetings. This is a significant weakness of the proposal. The offeror proposed a lot of effort on the architecture side, which is out of scope for this requirement. This requirement is on the application side, and the offeror should have assumed that the architecture to support the solution would be in place. Page 7 of the proposal presents a DR/backup plan, which is out of scope for this requirement and only makes sense for a proposal covering the system rather than the application. It appears that the offeror does not understand the requirement. The second table on page 7 repeats the DR/backup plan instead of presenting the training plan. This is further evidence of weakness in the offeror’s attention to detail and in the internal quality control processes it is able to bring to bear on this project. Finally, none of the personnel proposed as Key on this project have any content management, document management, or workflow development experience.

ASI proposed a very strong team and had outstanding past performance that was found to be consistent in scope and magnitude. Content management, document management, workflow development, and SharePoint application development were all included in both the key personnel and the organizational past performance. ASI received the second highest rating of all offerors in the Likelihood of Success category. However, the proposal also demonstrated significant weaknesses. On the cover page they refer to the department incorrectly, and on page 4 they refer to the agency incorrectly. These errors raised serious concerns within the TEP about the offeror’s attention to detail and the internal quality control processes it is able to bring to bear on this project. At the top of page 4, the proposal states that this requirement has a “very high risk of failure.” The panel completely disagreed with this assessment. Section 3.2 of the combined Synopsis and Solicitation indicates that the evaluation will be based on the approach to “project management constraints of schedule, quality, scope, risk, and customer satisfaction.” The TEP disagreed with the offeror’s assessment and agreed that the offeror’s risk management processes are not consistent with those of the Agency. Finally, the offeror identified the proposed COTS product as an important value add; however, the TEP did not see how the government would realize the value of this COTS product in this acquisition. Specifically, the proposal identified no impact from using the product on schedule, quality, risk, scope, etc.

The proposal submitted by CUS is generally good, but the TEP agreed that it lacks a firm commitment in many places. A good example is Section 3.3.1 of the proposal, which states: “In addition to the built-in search functionality of MOSS, we can build a custom advanced search facility that will enable the users to search with additional parameters, like review due date, requests from a particular agency, etc., that will improve user experience.” In this sentence the offeror identifies a capability but does not commit to delivering it as a product or a service (“we can build” vs. “we will build”). Such expressions of capability were sprinkled throughout the proposal. The proposal also lacked some detail concerning the intricacies and nuances of SharePoint. For example, Section 3.3.1 of the proposal identifies a risk in one of the requirements, concurrent review; however, the TEP agreed that the proposal’s description did not mitigate that risk. The fourth risk in that section states that writers will be able to decline an assignment, but the risk the Agency is seeking to avoid is one in which work has been assigned to a writer who is then away on sick leave or for other reasons. The Agency must have a mechanism for efficiently reassigning high-priority work to another resource. As such, the panel agreed that there is a lot of good thought here, but some of it needed more consideration to form a complete approach. Only one of the past performance projects was consistent with this requirement in terms of scope, and it was not a close match in terms of magnitude. None of the proposed key personnel has document management, content management, or workflow development experience, which is core to this requirement.

VID proposed a COTS product that would result in a shorter schedule. The offeror’s past performance was found to be successful, and the projects were found to be consistent with the scope and magnitude of this requirement. The key personnel all appear to have strong experience on projects similar in scope and magnitude to the current requirement. As such, VID received the highest rating on the Likelihood of Success criterion. However, the methodology in Section 2.2.3 of the proposal and the high-level plan in Section 2.2.4 restate much of the SOW without offering an approach to how VID would actually perform the work. They state that the work will be performed, but lack the “How” of getting it done. As a result, the TEP agreed that they did not provide a strong approach as defined in Section 3.2 of the combined Synopsis and Solicitation. Additionally, the content of Sections 3.2.4 and 3.2.5 of the proposal appears to have been duplicated. This reflected poorly on the quality control processes the offeror can bring to the project.

PAD states at the beginning of its proposal that it will be using a COTS tool, but the tool is never named. This was seen as introducing some risk to the proposal, because the licensing and maintenance of the COTS tool are unknown. The offeror claims that SharePoint can never really be 100 percent Section 508 compliant, but there is a VPAT for SharePoint MOSS 2007, which seems to be consistent with the government’s requirement. The proposal is very process-intensive but less SharePoint-specific. There is no doubt that the offeror is a CMMI Level 3 type of organization and that its process is complete; what is lacking are the specific nuances of SharePoint implementation. Jane Dough’s experience in internal SP development seems like a good match to this work, and the TEP agreed that she was a strength of the proposal. The JAS Application, while successful, is not similar to the current requirement. The OTHER PROJECT and Philips also seem quite different. As such, none of the organizational past performance is consistent with the scope of the current requirement. The key resource, Smith, seems to have good experience, but it is centered on selling technical solutions rather than developing them. The panel could not see the relevance of selling $11 million here and $3 million there. The government is not looking for someone to sell us products; we need the work done.

In evaluating the proposal from SAP, the TEP agreed that there was a strong Project Management component built into the proposal. Additionally, the offeror’s approach to training development seems well conceived. However, a closer review of the details revealed some inconsistencies. Specifically, in Section 2.2.2 the offeror claims that it is in the government’s best interest to leverage the native capabilities of SharePoint, but in the very next section, 2.2.3, they propose a great deal of custom code. This was seen as an inconsistency in their approach. Additionally, for Help Desk Support (SOW Section 5.1.7) the offeror is proposing Tier 1 support, whereas the requirement is for Tier 2 support, in which the contractor helps the Tier 1 team build up its capability to address issues arising from the deployment of this application. While the previous project is a close match to the up-front work, the project in Section 3.1.2 of the proposal was found not to be similar to the government’s requirement. Section 3.1.3 of the proposal includes a lot of workflow work, but the offeror did not propose the .NET Visual Studio Workflow Designer. Section 3.1.4 of the proposal is more architecture-based than development-based, which is interesting but not a close match to the work of this requirement, since the architecture is already established. One of the proposed key personnel is extremely strong, especially in SharePoint architecture; however, that aspect is out of scope for this work, and because the architecture is already complete he was not considered a value-adding part of the proposal. The resource identified as a workflow specialist seems qualified in that area; however, no SharePoint experience is indicated for her, which posed some risk.