In recent years, Federal Agencies have been challenged by a growing list of adversaries operating in an increasingly complex cyber threat landscape. At the same time, Agencies have been diligently modernizing their information technology (IT) environments to accommodate evolutionary cloud technology trends and a more mobile and remote workforce. These dynamics have combined to create a complex and seemingly insurmountable challenge in securing and protecting the systems, infrastructure, data, information, and personnel representing our nation’s most critical and sensitive assets.

In the cybersecurity domain, Agencies face a wide range of business and technical constraints. They must secure mission-critical assets despite constrained funding, talent shortages, cyber skills gaps, and an overwhelming regulatory and compliance framework. The fast pace of technology evolution, combined with urgent demands for a decisive advantage against determined adversaries, has produced complex operational IT environments with disjointed and siloed security architectures. In many Agencies, security teams are flooded with data, alerts, and indicators but lack the ability to properly analyze, make sense of, and act on the information available to them. Unprotected attack surfaces, security blind spots, and exposed vulnerabilities remain throughout the environment and are prime targets for malicious actors. Deployments of common baseline solutions that rely on manual analysis and process workflows have not proven effective against today’s adversaries.

While the cyber threat landscape continues to outpace defenses, federal IT and operational technology (OT) environments are also undergoing evolutionary change. Recognizing the performance, innovation, speed, and scale advantages of cloud platforms and solutions, Agencies are actively moving more sensitive and critical applications, data, and information to cloud environments. To support a more mobile and remote workforce, Agencies have had to rethink and re-architect their IT to deliver more distributed capabilities, which has broadened attack surfaces and increased risk across the IT estate. These architectural changes across the federal IT and OT landscape demand equally evolutionary change in the security controls and protections deployed to secure federal assets and information.

The current geopolitical environment, with multiple overseas conflicts that threaten U.S. interests on the world stage, pits the United States against technologically advanced nations and underscores, more than ever before, the importance of cybersecurity and technology capabilities and dominance.

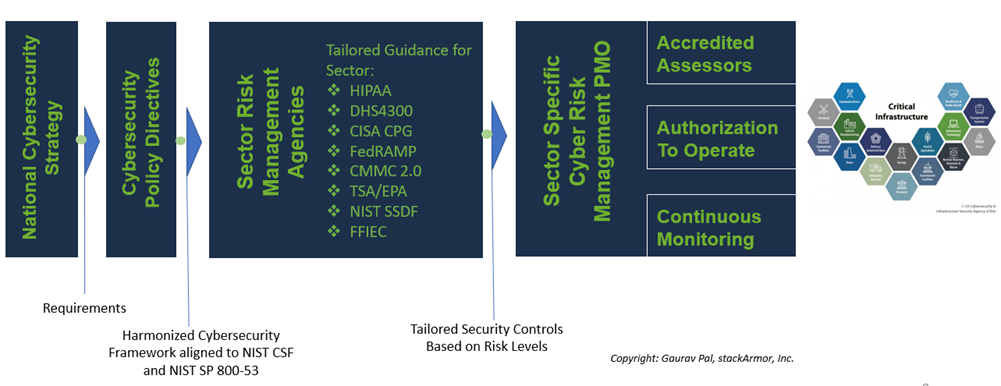

To address these challenges, Executive Order 14028, Improving the Nation’s Cybersecurity (EO 14028), seeks to drive urgent and immediate change throughout the federal government to modernize and improve the security posture of the nation’s IT infrastructure, foster robust threat and information sharing, enhance visibility into vulnerabilities and exposures, and improve investigative capabilities to automatically protect against, detect, and respond to malicious activities, incidents, and events. In support of the order, OMB Memorandum M-21-31 guides agencies through an event logging maturity model aimed not only at collecting and storing log data for visibility, but also at applying AI/ML and behavior analytics capabilities to enable orchestrated, automated, and comprehensive response actions. The highest tier of that model, Event Logging Tier 3 (EL3, Advanced), provides for the rapid and effective detection, investigation, and remediation of cyber threats as decisive actions against modern adversary tactics, techniques, and procedures (TTPs).

At SentinelOne, we are uniquely positioned to help Agencies tackle these problems and combat our most aggressive and malicious adversaries. The SentinelOne Singularity Platform is the only FedRAMP Authorized solution empowering centralized security operations in a world of big data and decentralized IT. Built for the high-performance, high-scale demands of our Federal Agencies, our platform and service offerings are leading the way for Agencies to fully leverage the power of AI/ML, deep analytics, and autonomous enforcement technologies. Combined with an extensive XDR Ecosystem of seamless partner integrations for estate-wide context and enforcement, SentinelOne provides a powerful toolset that Agencies are using for real results on the cyber battlefield.

SentinelOne | Singularity Platform

Delivers industry-leading, autonomous protection, detection, and response across attack surfaces.

The SentinelOne Singularity Platform delivers a single, unified console to manage the full breadth of AI-powered, autonomous cybersecurity protection, detection, and response technologies for all-surface protection. Our cloud-native technologies deliver performance at scale with unique, patented endpoint activity monitoring and remediation capabilities. Our Storyline Active Response (STAR), powered by AI/ML technologies, is foundational to improving investigative visibility and delivering automated response actions against modern attacks. With full rollback as a remediation action, SentinelOne is leading the way in the comprehensive, rapid, and effective recovery of assets compromised by ransomware and other malicious activity. The broad endpoint coverage, robust feature set, multi-tenant architecture, and scalable infrastructure of the SentinelOne Singularity Platform are unmatched in helping Agencies meet the requirements of Executive Order 14028.

SentinelOne | Security DataLake

Provides unmatched cross-platform security analytics and intelligence with scalable, cost-effective, long-term data retention.

The SentinelOne Singularity Platform, and its underlying Security DataLake, is the industry’s first and only unified, M-21-31-aligned data repository that fuses SentinelOne and third-party security data for active threat hunting, deep-dive analytics, and autonomous response and enforcement, all from a single unified console. The unified data repository is built to deliver performance at scale, combining unique technologies that dramatically reduce the time required for complex, large-scale investigative queries. With built-in, AI-driven analytics, the Security DataLake gives security analysts powerful capabilities that reduce the mean time to detect, identify, and respond to threats exposed in security log and event data. With scalable, cost-effective, long-term data retention and robust AI-powered analytics, the SentinelOne Security DataLake is unmatched in delivering the capabilities Agencies need to reach EL3 maturity and M-21-31 compliance.

SentinelOne | Security XDR Ecosystem

Enhances threat intelligence and information sharing with open, bidirectional integrations across your security stack for extended threat enrichment and enforcement.

The SentinelOne Singularity XDR Ecosystem is the leading cloud-first security platform that enables organizations to ingest and centralize all security data while delivering autonomous prevention, detection, and response at machine speed across endpoints, cloud workloads, identity, and the extended third-party security ecosystem. With “one-click,” multi-function integrations, SentinelOne delivers better detection and response, greater actionability, and improved workflows while protecting existing security investments and preserving cybersecurity talent and skills through a unified, consistent, and intuitive interface spanning your security environment. As an open platform for sharing threat information, enabling deep visibility, applying robust security analytics across large-scale datasets, and driving autonomous response, the SentinelOne Singularity XDR Ecosystem stands alone in providing the end-to-end, seamless architecture and services Agencies need to meet the objectives of both Executive Order 14028 and OMB Memorandum M-21-31.

SentinelOne | Purple AI

Empowers cybersecurity analysts with AI-driven threat hunting, analysis, and response through conversational prompts, interactive dialog, and easy-to-understand analysis and recommendations.

SentinelOne continues to lead the industry in the innovative use of AI/ML technologies to enable more streamlined and effective SOC performance and response. Our latest innovations demonstrate powerful use cases for generative AI that better equip SOC analysts to search, understand, and gain actionable insights from extensive data stores without having to learn complex query and programming languages. Our recently announced Purple AI will deliver seamless integration of generative AI technologies, allowing SOC analysts to use conversational, plain-language queries and prompts to perform complex, deep analysis of both known and unknown threats. Cybersecurity is a “big data” problem, and Purple AI is being designed to simplify threat hunting and analysis, expose meaningful and contextualized data, and deliver insightful, actionable recommendations. SentinelOne Purple AI will equip less-experienced and resource-constrained security teams with the tools they need to respond to attacks faster and more easily, in alignment with Executive Order 14028 and OMB Memorandum M-21-31.

SentinelOne is proud to partner with our federal government agencies in the fight against advanced adversaries seeking to compromise our national security. We are committed to delivering innovative and impactful cyber technology solutions that allow federal agencies to keep up with a growing list of cyber adversaries operating in an increasingly complex cyber threat landscape. The SentinelOne Singularity Platform, its underlying Security DataLake, the integrated XDR Ecosystem, and emerging Purple AI enhancements are uniquely designed to arm federal organizations with the tools they need to automatically identify and respond to threats in real time and reduce the burden on understaffed and overloaded SOC teams.

For more information on the SentinelOne portfolio, emerging innovative technologies, and how SentinelOne can arm your organization to win the cyber wars, please contact us at s1-fedsquad@sentinelone.com.